InCiSE Index

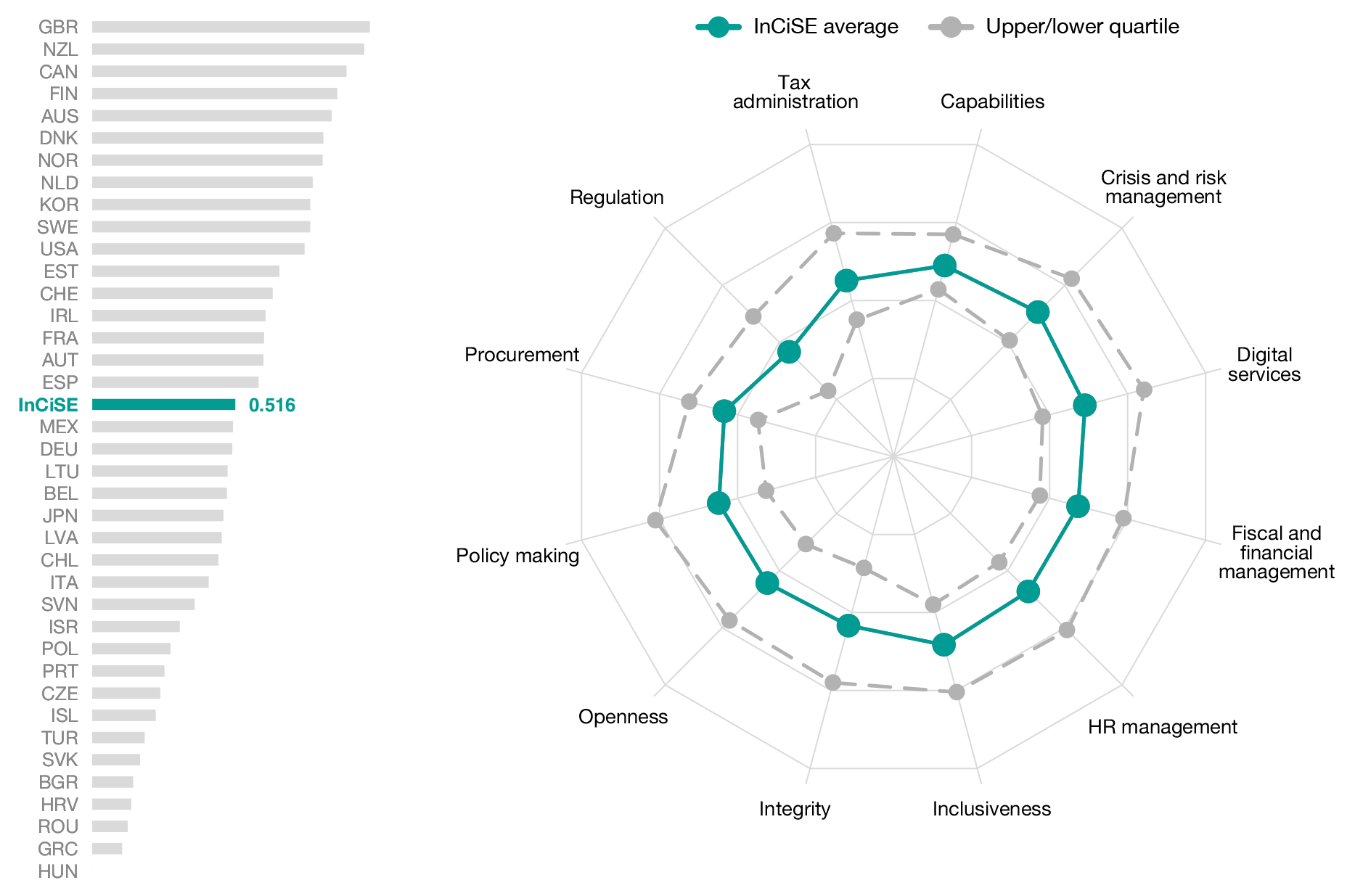

Benchmarking civil service performance across OECD and EU countries.

About this project

From 2017 to 2019 I led the UK Civil Service’s involvement in the International Civil Service Effectiveness (InCiSE) partnership, a collaboration with the Institute for Government and the Blavatnik School of Government, University of Oxford.[1]

While several projects for comparing public governance across countries already existed, the specific aim of the InCiSE index was to assess public administration capacities. The goal was to provide information on the performance and functioning of civil services, in order to improve accountability and knowledge exchange. Other indices either combined assessments of public administration with other important governance topics (e.g. quality of democracy and/or policy performance) or focussed on specific topic areas (e.g. open data, budgeting, anti-corruption).

The InCiSE partnership published the pilot edition of the index in early 2017. I joined the project in autumn 2017 to lead the technical analysis for the next edition of the index, which was published in 2019. My responsibilities in the project included: improving the methodology of the index and its technical development, authoring the technical report, ensuring robust quality assurance of the model, and presenting the work to a wide range of stakeholders from administrations around the world.

Improving methodology and reproducibility

The 2017 pilot edition was developed through analysis in Microsoft Excel, supported by some modelling in Stata. Switching between software packages creates potential issues in itself, and the technical limitations of these packages meant that several stages of the data processing and modelling relied on manual copy/paste transfers of data. This introduced significant opportunities for error, especially when coupled with manual version control practices.

To overcome these issues the modelling was rewritten from first principles in R, a statistical programming language. As R is open source software, this also increases the opportunity for transparency and reproducibility.[2] Adopting a code-based approach to the analysis allowed strong version control to be implemented via Git, as well as enabling a more robust approach to imputation for handling missing data.

I introduced a ‘data quality assessment’ component to the modelling that provided a quantitative appraisal of the data quality of both countries and indicators. This allowed a more sophisticated and data-driven approach to be taken to country selection, supporting an increase in country coverage from 31 to 38 countries. This approach also allowed data quality considerations to be taken into account within the weighting of the final index scores.
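As a rough illustration of the idea, the sketch below scores data quality as the share of non-missing values, for countries and for indicators, then uses those scores for country selection and indicator weighting. Everything in it is an assumption made for illustration: the actual InCiSE data quality assessment was implemented in R and its scoring rules are not described here, and the country names, indicator names, values and the `THRESHOLD` cut-off are all hypothetical.

```python
# Hypothetical scores: None marks a missing value. Not real InCiSE data.
scores = {
    "Country A": {"ind_1": 0.8, "ind_2": 0.6, "ind_3": 0.7, "ind_4": 0.9},
    "Country B": {"ind_1": 0.5, "ind_2": None, "ind_3": 0.4, "ind_4": 0.6},
    "Country C": {"ind_1": None, "ind_2": None, "ind_3": 0.3, "ind_4": None},
}
indicators = ["ind_1", "ind_2", "ind_3", "ind_4"]

THRESHOLD = 0.5  # assumed minimum data quality for a country to be included


def country_quality(values):
    """Share of a country's indicators that have an observed value."""
    observed = sum(1 for v in values.values() if v is not None)
    return observed / len(values)


def indicator_quality(data, indicator):
    """Share of countries that have an observed value for an indicator."""
    observed = sum(1 for vals in data.values() if vals[indicator] is not None)
    return observed / len(data)


# Data quality of countries, used here to decide which countries to keep.
country_dq = {name: country_quality(vals) for name, vals in scores.items()}
selected = sorted(name for name, q in country_dq.items() if q >= THRESHOLD)

# Data quality of indicators, normalised so the weights sum to 1.
indicator_dq = {ind: indicator_quality(scores, ind) for ind in indicators}
total_dq = sum(indicator_dq.values())
weights = {ind: q / total_dq for ind, q in indicator_dq.items()}

print(selected)
print(weights)
```

The point of the sketch is only that a single quantitative score can serve both purposes mentioned above: a threshold on the country score drives selection, while the indicator score feeds into weighting so that better-covered indicators count for more.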

An additional benefit of these changes was the ability to substantially increase the number of tests included in the sensitivity analysis of the index results, up from 7 tests in the 2017 pilot to 20 tests in the 2019 edition, thereby giving greater confidence in the final results.

Improving data coverage

The other area of improvement in the technical modelling was the range of source data used in the index and its pre-processing. The number of data points contributing to the InCiSE index rose to 116 in the 2019 edition, up from 76 used in the 2017 pilot (of which 70 were carried through to the 2019 edition). A majority of the additional data points used in 2019 (30 out of 46) came from a review of existing data sources; the others came from new data sources published since the release of the 2017 pilot.
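The figures above fit together as follows. This short check uses only the numbers quoted in the text; the variable names are illustrative.

```python
points_2017 = 76       # data points used in the 2017 pilot
carried_forward = 70   # 2017 data points retained in the 2019 edition
points_2019 = 116      # data points used in the 2019 edition

# Data points in 2019 that were not part of the 2017 pilot.
additional = points_2019 - carried_forward

from_review = 30                             # from a review of existing sources
from_new_sources = additional - from_review  # from newly published sources

# 2017 data points that were not carried through to 2019.
not_carried = points_2017 - carried_forward

print(additional, from_new_sources, not_carried)
```

So the 46 additional data points split into 30 from existing sources and 16 from new ones, and 6 of the pilot's data points were not carried forward.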

I identified ways to improve the representation of data on crisis and risk management by combining data from the existing data source with new OECD data, providing a more holistic measurement of the concept than the natural disaster focus of the 2017 pilot. I redesigned the capabilities indicator to make use of a wider range of data points from the source data and to produce more targeted estimates for the population of interest (public administration officials). I changed the way the model used the source data on digital services, to better represent those services most likely to be delivered by national government rather than by other public sector bodies. I also identified data sources that would allow the procurement domain of the InCiSE framework to be measured for the first time, combining data from an OECD assessment with open data published by public procurement organisations.

You can read more about the InCiSE project and download the reports from the Blavatnik School of Government’s website.

[1] The Institute for Government and Blavatnik School of Government received funding from the Open Society Foundations to support their participation in the partnership.

[2] There is an intention to put the source code for the 2019 edition of the model in the public domain.